The Era of Agent Workflows begins on June 1

Join us to supercharge your enterprise with agent workflows.

Enterprise solutions, at the ready

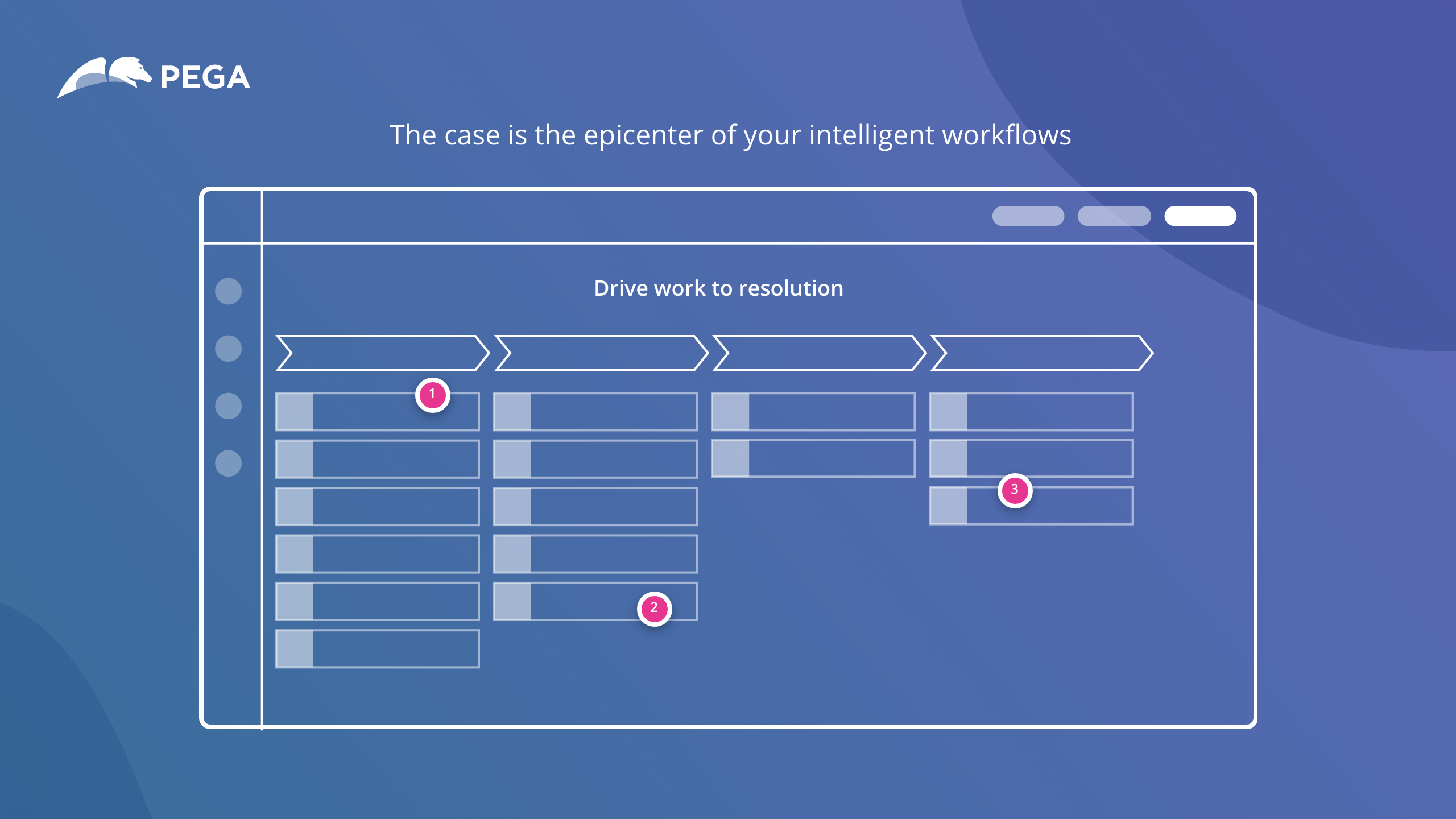

Pega is architected to run your most critical journeys. Explore our key solutions.

Enterprise solutions hand-picked for you

Tell us your specific challenges. We’ll recommend the best tech to help you achieve incredible outcomes.

Have an idea? Build it instantly.

Set your vision and see your workflow generated on the spot. Edit it live and import it directly into Pega. Pega Blueprint just revolutionized app design. Are you ready?

Ford redefines the EV customer journey

With Pega, Ford overhauled its test drive booking system, empowering dealerships and increasing customer satisfaction by 30%. See how Ford is setting new standards in the EV industry.

“Customer experience and trust are intrinsically linked. So we need to make sure that we are a trusted company, and we can do that through our customer experience.”

Learn why we’re the enterprise transformation company™

Unlock future-defining outcomes on the only platform with enterprise evolution built right in.